My intention is not to write a self-promoting article; I merely want to share my reflections, needs, and frustrations in using inadequate tools that led me to make one of my own that was able to do what I needed. A tool that not only shows data but processes them with advanced algorithms to transform them into valuable information that allows us to act concretely and garner immediate results.

How to discover your website’s SEO potential

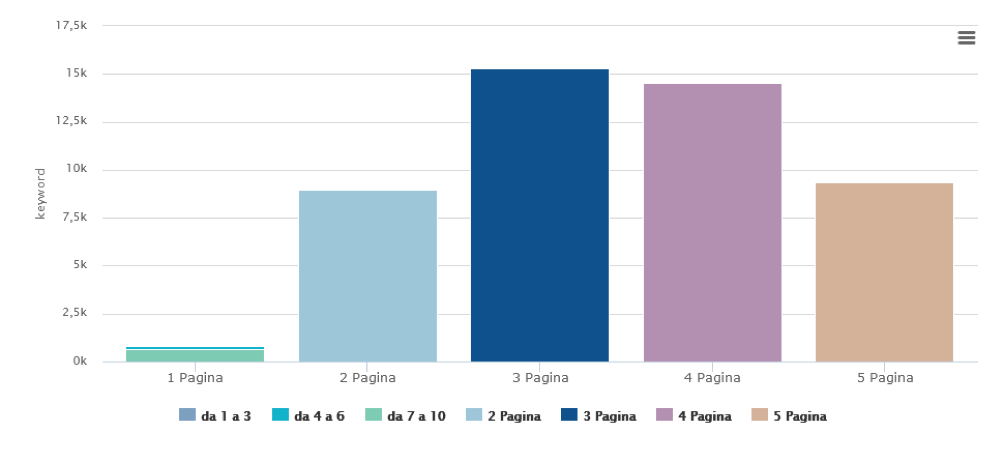

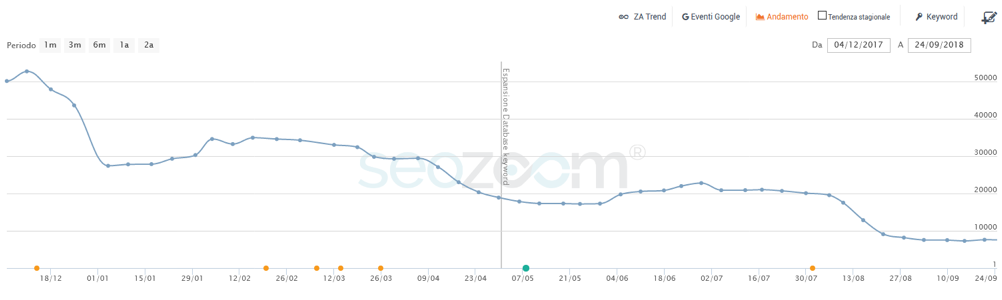

Every website has untapped potential, even one we might deem the worst because it gets no search engine traffic. There are so many opportunities to increase traffic that we cannot seize because of technical SEO issues or content that is not entirely focused on users' needs, as in the case depicted in the graph below:

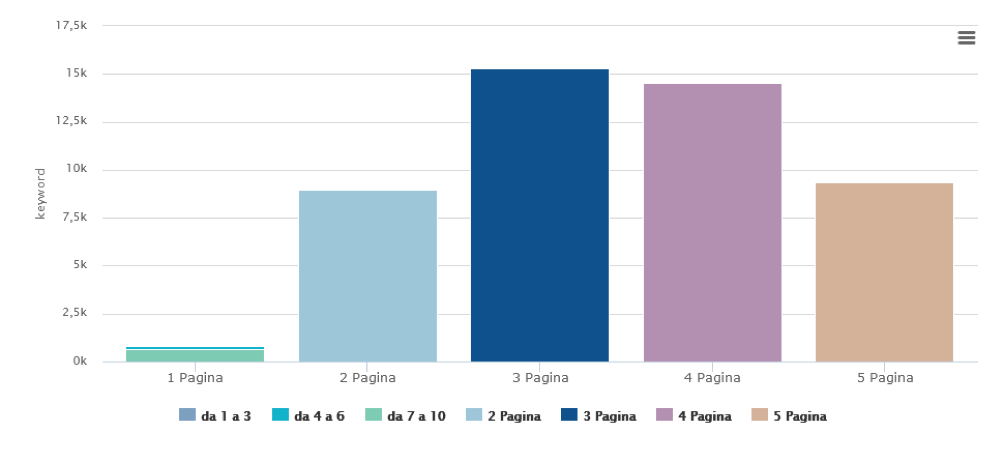

This graph shows a website with tens of thousands of potential keywords, of which only a few rank in the top ten.

In twelve years of work as an SEO consultant, I have always given a prominent role to the study of the potential of a new client’s website in my preliminary analysis.

Companies that had not even worked on their website content contacted me and asked me to do it for them. I've never wanted to acquire that type of client, because they act like someone who goes to a nutritionist and asks the nutritionist to do the dieting for them.

However, in most other cases, the client had already worked hard on producing valuable information and rich content they had passionately written. What they had not focused on was the most important point, which comes from answering a few simple questions:

- "Who am I writing this for?"

- "How would I look for what I'm writing if I were a Google user?"

- "Will Google understand that I'm answering a specific user question?"

This kind of client produces content, typically not for SEO purposes. They write out of passion and feel satisfied when they click the "publish" button on their CMS after putting all their heart and brain power into the topic. After a few days, they realize they haven't had any visits.

This is the kind of client I love: passionate people or companies who get involved but lack a "coach" to keep them from wasting their enormous potential. When we find a customer who works on their website, and we have good potential to start with, the results are 100% guaranteed.

How to analyze a website’s potential

I did this job manually for years by finding the keywords with a high search volume (or those that could convert better into leads or sales) for which the website was on the second or third page of Google SERPs.

That way was arduous and did not bear very much fruit. Here's why:

- Starting from the best keywords, I had to identify the URLs to work on to get traffic

- I had to decide if it was worth changing the page or not. It was likely that the chosen URL already had traffic on other keywords, and it was possible that modifying the content would have risked losing it.

- Before modifying the content, it was necessary to analyze the Google SERP to understand whether our page's text was in line with the other content Google had chosen for the SERP. This told us whether we needed to rewrite the page entirely or just make a few improvements.

- I also needed to work out what other relevant keywords I could place in the same content, so I had to analyze the competitors' pages to identify their relevant keywords.

- As we all know, Google does not always respond with the best result; it often has to settle for the "least bad" one. This was vital information for me: knowing I would be competing with weak competitors on a topic was a huge competitive advantage.
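The first step of that manual routine (picking high-volume keywords stuck on page two or three) can be sketched in a few lines. This is only an illustration under assumptions: the `Ranking` record, the `striking_distance` helper, the sample data, and the 11 to 30 position window are all hypothetical, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class Ranking:
    keyword: str
    url: str
    position: int        # organic position on Google (1 = first result)
    monthly_volume: int  # estimated monthly searches (hypothetical figures)


def striking_distance(rankings, first=11, last=30):
    """Return rankings on SERP pages two and three, highest volume first."""
    hits = [r for r in rankings if first <= r.position <= last]
    return sorted(hits, key=lambda r: r.monthly_volume, reverse=True)


# Made-up sample data for illustration only.
rankings = [
    Ranking("running shoes", "/shoes", 14, 9000),
    Ranking("trail running shoes", "/shoes", 4, 2500),
    Ranking("best running socks", "/socks", 27, 1200),
    Ranking("marathon training plan", "/training", 45, 6000),
]

candidates = striking_distance(rankings)
for r in candidates:
    print(r.keyword, r.url, r.position, r.monthly_volume)
```

The output is only a shortlist; the remaining checks in the list above (existing traffic on the URL, SERP fit, competitor strength) still have to be made for each candidate.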

As you can imagine, given the complexity and the amount of information I needed to evaluate for each URL, I had two options:

- Give up on this work, or

- Create an automated tool that could do it all on its own.

This dilemma gave me the idea of creating SEOZoom, a suite of SEO tools with a distinct approach from any other suite out there. I wanted an "opportunity-based" mechanism that would help me be actionable.

Here’s a summary of the approach, but remember that this article is not about SEOZoom, but instead the philosophy that led me to create it and automate all the work.

What do users want?

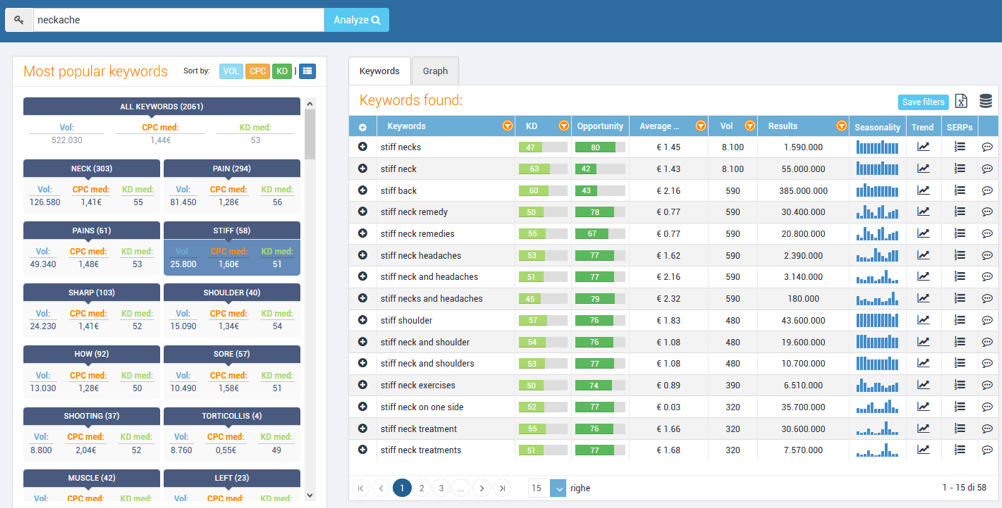

I needed a tool to help me understand all the ways a user could search for the same thing on Google, so I created an algorithm to identify the Search Intent.

It reverse-engineers Google's SERPs and manages to identify all the keywords that can be considered relevant to a specific topic.

With this first step of studying the potential done, I could understand whether my web pages were relevant for a topic and, furthermore, whether I had analyzed the question deeply enough, by looking at other vital topics that competitors had covered.
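One plausible way to approximate this kind of SERP reverse-engineering is to treat two keywords as sharing an intent when Google returns many of the same URLs for both. The sketch below is an assumption of mine, not SEOZoom's actual algorithm: the Jaccard similarity measure, the `related_keywords` helper, the 0.3 threshold, and the sample SERPs are all hypothetical.

```python
def jaccard(a, b):
    """Share of URLs two result lists have in common (0.0 to 1.0)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def related_keywords(seed, serps, threshold=0.3):
    """Keywords whose SERP overlaps the seed keyword's SERP enough
    to suggest the same search intent, most similar first."""
    seed_urls = serps[seed]
    scored = [
        (keyword, jaccard(seed_urls, urls))
        for keyword, urls in serps.items()
        if keyword != seed
    ]
    kept = [(keyword, score) for keyword, score in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Made-up top results per keyword, for illustration only.
serps = {
    "how to lose weight": ["a.com/diet", "b.com/weight", "c.com/plan", "d.com/tips"],
    "weight loss tips": ["a.com/diet", "b.com/weight", "d.com/tips", "e.com/fit"],
    "lose weight fast": ["a.com/diet", "c.com/plan", "f.com/fast", "g.com/quick"],
    "buy running shoes": ["x.com/shoes", "y.com/run", "z.com/shop", "a.com/diet"],
}

related = related_keywords("how to lose weight", serps)
for keyword, score in related:
    print(keyword, round(score, 2))
```

With real data the SERPs would be the top ten or twenty results per keyword, and the threshold would need tuning per market.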

The classic keyword/position approach of rank trackers was inadequate and of little help.

Analyzing entire domains using only Excel-style grids of keywords, search volume, position, and URLs was impractical and would have taken months for every individual website.

I wondered how it was possible that every existing tool worked only in this way; with them, I couldn't work out how each page was ranking on ALL of its keywords in terms of organic traffic.

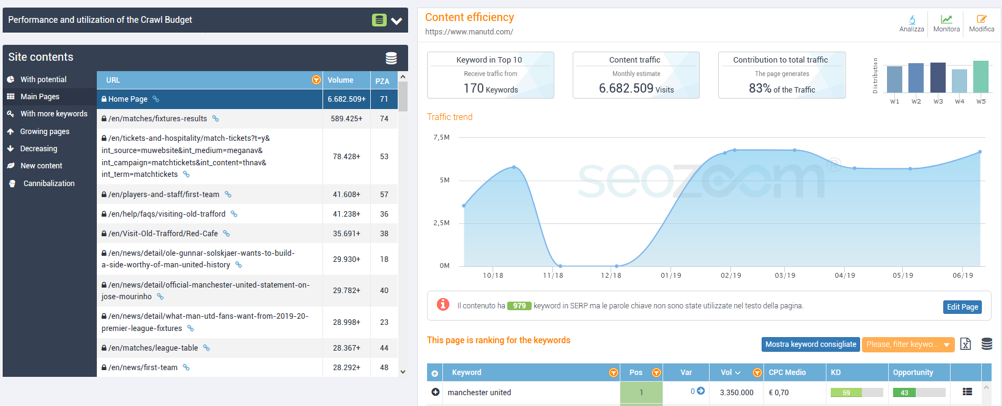

So, I came up with the idea of creating the first Rank Tracker based on URLs rather than keywords; it was something that didn't exist on the SEO suite market. Finally, for any page of a site, I could automatically know how many keywords it ranked for and which ones, what keywords brought it traffic, and the potential keywords I could work on. Not only that; I could also follow the page's performance over time.
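The shift from a keyword view to a URL view is essentially a regrouping of the same rows. A minimal sketch, assuming a flat export of `(keyword, url, position, monthly_volume)` tuples; the `group_by_url` helper, the field names, and the position cut-offs are hypothetical choices for illustration.

```python
from collections import defaultdict


def group_by_url(rows, top=10, reach=30):
    """Pivot keyword rows into one summary per URL."""
    pages = defaultdict(
        lambda: {"keywords": 0, "top_ranked": [], "potential": [], "potential_volume": 0}
    )
    for keyword, url, position, volume in rows:
        page = pages[url]
        page["keywords"] += 1
        if position <= top:
            page["top_ranked"].append(keyword)
        elif position <= reach:
            # Ranked on page two or three: a keyword worth working on.
            page["potential"].append(keyword)
            page["potential_volume"] += volume
    return dict(pages)


# Made-up rows for illustration only.
rows = [
    ("running shoes", "/shoes", 14, 9000),
    ("trail running shoes", "/shoes", 4, 2500),
    ("cheap running shoes", "/shoes", 22, 1800),
    ("best running socks", "/socks", 8, 1200),
]

pages = group_by_url(rows)
for url, summary in pages.items():
    print(url, summary)
```

Storing one such summary per crawl date would give the performance-over-time view for each page.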

SEOZoom's "page" approach also served as inspiration for other international tools: a year after our release, they adapted their products to offer a similar solution, because it provides a different view of the performance of every web page.

What do SEOs want? The full vision!

I have always been very critical of my work, and while I was satisfied with the page tracking, I realized it was much more useful to a copywriter than to an SEO like me. It's convenient for a copywriter to know which page to improve, but as an SEO, I needed enough information to carry out global actions on entire sections of the website. What I needed was a "better overview."

I needed to divide my website’s URLs and organize them based on the traffic volume and create content analysis algorithms to get the following:

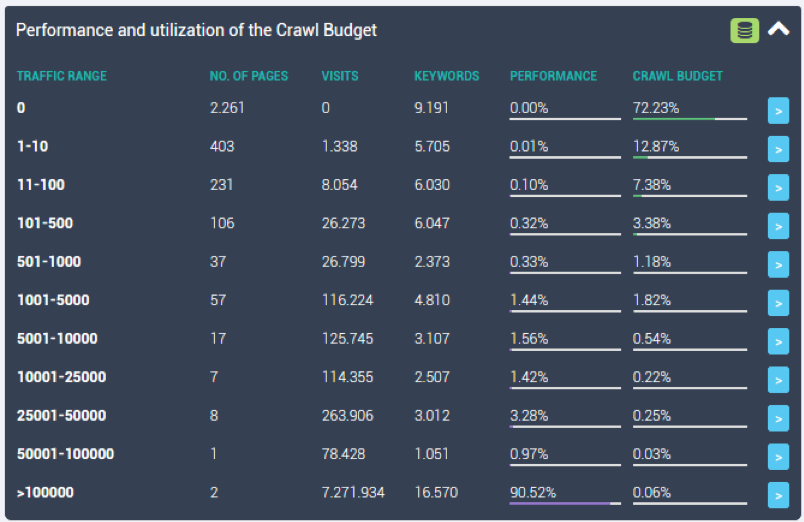

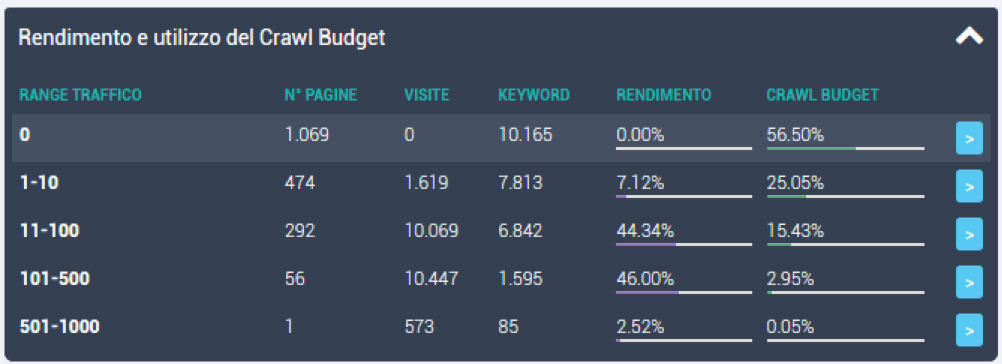

1. Find how many pages, and which ones, waste crawl budget by bringing zero traffic to the site.

In many cases, I discovered that 90% of the crawl budget was wasted on pages that didn't contribute to site traffic. The various SEO spiders show us our pages' technical problems, but no spider told me which pages were useful and which ones were a complete waste of bytes.

2. Understand how stable my website’s rankings were.

In many cases, only a handful of pages carry all the traffic, while thousands and thousands of other pages are a mere waste of crawl budget. Our traffic is at risk in these circumstances because if the rankings of one of these few pages tank, we could lose everything. We have to act immediately to stabilize our SERP presence by strengthening content.

As we can see in the graphic above, 0.06% of the website’s pages produce 90.52% of the website traffic. We can tell in cases like this one that our global traffic is at risk.

3. Determine if groups of pages are competing for the same keywords by identifying potential cannibalizations.

When we have been working on a website for years, we will likely find ourselves writing about a topic we have already covered in earlier content.

Unless you have been meticulously managing your content production and trying not to overlap your old and new pieces of content, you run the risk of cannibalization problems, that is, of having multiple web pages competing for the same keywords.

In these cases, one of the two pages will trump the other, and the losing page becomes a burden because Google is still forced to crawl it, which ends up wasting crawl budget.
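The three checks above can each be reduced to a small calculation over per-page data. The sketch below is a simplified illustration, not the algorithms used in SEOZoom: it assumes a hypothetical `page_traffic` mapping of URL to organic visits and `(keyword, url, position)` ranking rows, and it uses zero-traffic pages as a rough proxy for wasted crawl budget.

```python
from collections import defaultdict


def zero_traffic_share(page_traffic):
    """Fraction of pages that bring no organic visits."""
    if not page_traffic:
        return 0.0
    zero = sum(1 for visits in page_traffic.values() if visits == 0)
    return zero / len(page_traffic)


def pages_carrying(page_traffic, share=0.9):
    """Smallest set of top pages whose traffic reaches `share` of the total."""
    total = sum(page_traffic.values())
    carried, top_pages = 0, []
    ranked = sorted(page_traffic.items(), key=lambda kv: kv[1], reverse=True)
    for url, visits in ranked:
        if total == 0 or carried >= share * total:
            break
        top_pages.append(url)
        carried += visits
    return top_pages


def cannibalized(rows, max_position=30):
    """Keywords for which more than one URL of the same site ranks."""
    urls = defaultdict(set)
    for keyword, url, position in rows:
        if position <= max_position:
            urls[keyword].add(url)
    return {kw: sorted(found) for kw, found in urls.items() if len(found) > 1}


# Made-up data for illustration only.
page_traffic = {
    "/guide": 950, "/news-1": 30, "/news-2": 20, "/tag/a": 0, "/tag/b": 0,
    "/tag/c": 0, "/tag/d": 0, "/tag/e": 0, "/old-1": 0, "/old-2": 0,
}
rows = [
    ("seo audit", "/blog/seo-audit", 12),
    ("seo audit", "/services/audit", 18),
    ("crawl budget", "/blog/crawl-budget", 5),
]

wasted = zero_traffic_share(page_traffic)
core = pages_carrying(page_traffic)
conflicts = cannibalized(rows)
print(wasted, core, conflicts)
```

A short `core` list next to a high `wasted` share is the risk pattern described in point 2: most of the traffic depends on very few pages.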

Let's move on to the action!

Thanks to the tools I had created, I could have an overview of the site's pages in an instant, but I needed a final piece to create the perfect solution for my needs.

For each problematic web page, I needed to know precisely how to improve it to make it more competitive and fill the content gaps compared to the competitors, so I created new algorithms to automate the work I had been doing manually up until then.

Here’s the process I went through to figure out what I needed to do on every web page:

1. I had to subject my web page's content to a qualitative and quantitative evaluation by comparing it to competitors' content on the SERP.

2. I had to understand why my URL was ranking on Google’s second page for many keywords or not ranking at all for others, while the competitors’ pages were.

To tackle this problem, I decided to create an algorithm to find what I called the intent gap: potential, highly relevant keywords for a URL, identified by reverse-engineering the Google results of my competitors.

3. I had to understand what my competitors had done on their pages. I needed to gather information about their organic traffic, their keywords, and the topics they had covered on their pages, but above all, what they had done more of and better than me.

Processing competitors' content and the keywords they ranked for allowed me to figure out what my pages were missing, what keywords had slipped past me, and what topics my content had omitted.

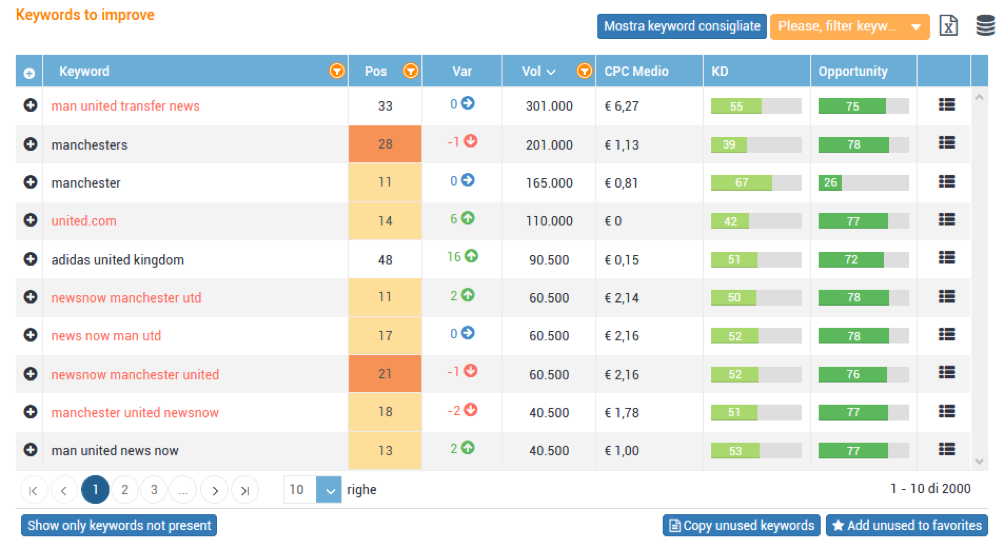

In red, I highlighted all the keywords for which my web page was almost in the top ten but which had never been used in the text.
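The comparison described in the steps above can be sketched as a set difference between what competitors rank for and what my page ranks for, plus a check on whether each keyword appears in the page text. Everything here is an assumption for illustration: the `intent_gap` helper, the position cut-offs, and the plain substring match (a real tool would need stemming and smarter matching).

```python
def intent_gap(my_positions, competitor_keywords, page_text, top=10, near=20):
    """List competitor keywords where my page is outside the top ten.

    my_positions: keyword -> my page's position (missing = not ranking).
    competitor_keywords: keywords competing pages rank for in the top ten.
    """
    text = page_text.lower()
    gaps = []
    for keyword in sorted(competitor_keywords):
        position = my_positions.get(keyword)  # None means the page does not rank
        if position is not None and position <= top:
            continue  # already in the top ten, nothing to fix
        if position is None:
            status = "not ranking"
        elif position <= near:
            status = "near top ten"
        else:
            status = "ranking low"
        gaps.append({
            "keyword": keyword,
            "position": position,
            "status": status,
            "in_text": keyword.lower() in text,  # crude substring check
        })
    return gaps


# Made-up data for illustration only.
my_positions = {"seo audit": 3, "seo audit checklist": 12, "technical seo audit": 15}
competitor_keywords = {
    "seo audit", "seo audit checklist", "technical seo audit", "seo audit tools",
}
page_text = "Our SEO audit checklist covers crawling, indexing and content."

gaps = intent_gap(my_positions, competitor_keywords, page_text)
# The equivalent of the red highlighting: close to the top ten, never used in the text.
highlight = [g["keyword"] for g in gaps if g["status"] == "near top ten" and not g["in_text"]]
print(highlight)
```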

The importance of an excellent Editorial Assistant

My last wish was to go through the whole process I have described up to now, click a button, and open an HTML editor that would help me do everything I had identified, guiding me step by step and showing me information from the web pages I was going to compete with.

I wanted to find out the following information about my competitors:

- What topics they had covered;

- What keywords they ranked for;

- How they used the TITLE tags;

- What they had put in H1, H2, H3;

- Which internal links they used;

- Which phrases they used for each topic, so I could take inspiration from them;

- An immediate idea of the potential search volume I was competing for with my article. I also wanted this potential volume to be updated in real time while I modified the text, enriching it with topics and keywords.
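The last wish in that list, a potential volume that updates while the text changes, can be illustrated with a very small function that is re-run on every edit. This is a hedged sketch only: `potential_volume`, the keyword volumes, and the substring matching are hypothetical simplifications, not how the editorial assistant actually works.

```python
def potential_volume(text, keyword_volumes):
    """Sum the monthly volume of every tracked keyword found in the text."""
    lowered = text.lower()
    return sum(
        volume
        for keyword, volume in keyword_volumes.items()
        if keyword.lower() in lowered
    )


# Made-up volumes for illustration only.
keyword_volumes = {
    "seo audit": 5000,
    "seo audit checklist": 1200,
    "crawl budget": 800,
}

draft = "A short guide to running an SEO audit."
revised = draft + " It ends with an SEO audit checklist and notes on crawl budget."

before = potential_volume(draft, keyword_volumes)
after = potential_volume(revised, keyword_volumes)
print(before, after)
```

Calling the function after each change to the draft gives the writer a running figure for the search volume the article can compete for.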

This time I got to work, and with the help of my team, I created the first editorial assistant three years ago. It came about out of personal need, and today, 22,000 companies in Italy are using it. Other tools have started to offer similar products since then.

I've broken down the entire behind-the-scenes process of creating my SEO suite. I am sure that walking through this process, with its rationale and the fundamental needs at the heart of its inception, can be of great help in taking a different approach to SEO, which we often see merely as a solution to technical problems.

A case study where I applied this methodology

The almeglio.it website continued to lose traffic despite the constant production of new content. Maybe there was something wrong with how they were choosing topics for new articles, maybe the old pages were gradually losing traffic, or perhaps it was a combination of the two.

The website was wasting almost 90% of its crawl budget on pages that produced zero organic traffic; the solution was to work on the most promising content to increase traffic with the web pages we already had available.

Thanks to content analysis, I understood which pages were the most suitable for filling the apparent intent gap.

I managed to regain all the traffic lost over time by working selectively on the pages with the greatest potential and modifying their text to make it more topically relevant.

As you can see from the image above, after the traffic drop, we intervened in the content, allowing us to bring the organic traffic back to previous levels.

We did this on about 50 pages, and it took about a week, but the result was satisfying. The few modified pages take up about 3% of the crawl budget but, after the intervention, they produce about 46% of the site's global traffic, which you can see in the second-to-last line of the image below.

The site's global traffic has finally returned to adequate traffic volumes for the type of website and amount of content produced.

Modern SEO is so many things, so many disciplines, but as I always say, it is more of a global vision!

Like Neo in The Matrix, you can defeat the enemy only when your eyes can see your website clearly in its entirety, in its essence.